Optimizer

The OptimizerBase provides a unified interface for using external optimization libraries.

It is responsible for converting the OptimizationProblem to the specific API of the external optimizer.

Currently, adapters for SciPy's optimization suite [4], Pymoo [6], and Ax [7] are implemented, all of which are published under open-source licenses that permit both academic and commercial use.

Before the optimization can be run, the optimizer needs to be initialized and configured. All implementations share the following options:

- progress_frequency: Number of generations after which the optimizer reports progress.
- n_cores: Number of cores the optimizer should use.
- cv_tol: Tolerance for constraint violation.
- similarity_tol: Tolerance for individuals to be considered similar.
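The two tolerances can be pictured with the following minimal Python sketch. The helper names is_feasible and are_similar are hypothetical, and the conventions they encode (a constraint counts as satisfied when g <= 0, similarity is measured by Euclidean distance, and similarity_tol=None disables the check) are assumptions for illustration, not CADET-Process's actual implementation.

```python
import math


def is_feasible(constraint_values, cv_tol=1e-6):
    """Hypothetical: treat g <= 0 as satisfied, tolerating violations up to cv_tol."""
    return all(g <= cv_tol for g in constraint_values)


def are_similar(x_a, x_b, similarity_tol):
    """Hypothetical: two individuals are similar if their Euclidean distance is below the tolerance."""
    if similarity_tol is None:
        return False  # assumption: None disables the similarity check
    return math.dist(x_a, x_b) < similarity_tol


print(is_feasible([-0.1, 5e-7]))  # small violation within cv_tol
print(are_similar([0.0, 0.0], [0.0, 1e-9], 1e-6))
```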

The individual optimizer implementations provide additional options, including options for termination criteria.

For this example, U_NSGA3, a genetic algorithm [8], is used.

Its options, including the shared ones listed above, can be displayed as follows:

```python
from CADETProcess.optimization import U_NSGA3

optimizer = U_NSGA3()
print(optimizer.options)
```

```
{'progress_frequency': 1, 'x_tol': 1e-08, 'f_tol': 0.0025, 'cv_tol': 1e-06, 'similarity_tol': None, 'n_max_iter': None, 'n_max_evals': 100000, 'seed': 12345, 'pop_size': None, 'xtol': 1e-08, 'ftol': 0.0025, 'cvtol': 1e-06, 'n_max_gen': None, 'n_skip': 0}
```

For more information, refer to the API reference of the individual implementations (see optimization).
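As an illustration of termination criteria, the sketch below combines an evaluation budget, a generation limit, and an objective tolerance in the spirit of n_max_evals, n_max_gen, and f_tol, using the default values printed above. The function should_terminate is hypothetical, and its f_tol rule (stop when the best objective value changes by less than f_tol between consecutive generations) is a simplified stand-in; the criteria U_NSGA3 actually applies through Pymoo may be more involved.

```python
def should_terminate(n_evals, n_gen, best_f_history,
                     n_max_evals=100000, n_max_gen=None, f_tol=0.0025):
    """Hypothetical: return True if any of the simplified termination criteria is met."""
    # Evaluation budget
    if n_evals >= n_max_evals:
        return True
    # Generation limit; None means no limit
    if n_max_gen is not None and n_gen >= n_max_gen:
        return True
    # Objective tolerance: best value barely changed between the last two generations
    if len(best_f_history) >= 2 and abs(best_f_history[-1] - best_f_history[-2]) < f_tol:
        return True
    return False


print(should_terminate(10, 3, [1.0, 0.5]))    # still improving
print(should_terminate(10, 3, [1.0, 0.999]))  # change below f_tol
```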

To start the optimization, the OptimizationProblem needs to be passed to the optimize() method.

Note that before the optimization runs, a check method is called to verify that the given optimization problem is configured correctly and is supported by the optimizer.
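What such a pre-flight check might look at can be sketched as follows. The Variable dataclass and the check_problem function are hypothetical; they only test that each variable's lower bound lies below its upper bound and that the number of objectives is supported by the optimizer, whereas the real check in CADET-Process may cover more or different conditions.

```python
from dataclasses import dataclass


@dataclass
class Variable:
    name: str
    lb: float
    ub: float


def check_problem(variables, n_objectives, supports_multi_objective=True):
    """Hypothetical: return a list of problems found; an empty list means the check passed."""
    errors = []
    if not variables:
        errors.append("No variables defined.")
    for var in variables:
        # Assumption: the bounds must span a non-empty interval
        if var.lb >= var.ub:
            errors.append(f"{var.name}: lower bound must be below upper bound.")
    if n_objectives < 1:
        errors.append("No objectives defined.")
    elif n_objectives > 1 and not supports_multi_objective:
        # e.g. a single-objective optimizer given a multi-objective problem
        errors.append("Optimizer does not support multiple objectives.")
    return errors


variables = [Variable("x_0", -5, 5), Variable("x_1", -5, 5)]
print(check_problem(variables, 2))
print(check_problem(variables, 2, supports_multi_objective=False))
```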

Consider the following bi-objective problem.

Note that, by default, results are saved to file. To disable this, pass save_results=False to optimize().


```python
from CADETProcess.optimization import OptimizationProblem

def multi_objective_func(x):
    # Two competing objectives: squared distance to (0, 0) and to (1, 0)
    f1 = x[0]**2 + x[1]**2
    f2 = (x[0] - 1)**2 + x[1]**2
    return f1, f2

optimization_problem = OptimizationProblem('moo')
optimization_problem.add_variable('x_0', lb=-5, ub=5)
optimization_problem.add_variable('x_1', lb=-5, ub=5)
optimization_problem.add_objective(multi_objective_func, n_objectives=2)

# n_cores is range-checked; a value above the allowed upper bound raises a ValueError
optimizer.n_cores = 4
optimization_results = optimizer.optimize(optimization_problem, save_results=False)
```

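For the bi-objective problem above, the Pareto-optimal set is known analytically: f1 and f2 are the squared distances from (x_0, x_1) to (0, 0) and (1, 0), so the optimal trade-offs lie on the segment x_1 = 0, 0 <= x_0 <= 1. The brute-force sketch below is independent of CADET-Process and is not how U_NSGA3 works; it recovers this result on a coarse grid by discarding every point that is dominated by another one, which can be handy for sanity-checking optimizer results on small test problems.

```python
import itertools


def multi_objective_func(x):
    # Same objectives as in the example above
    f1 = x[0]**2 + x[1]**2
    f2 = (x[0] - 1)**2 + x[1]**2
    return f1, f2


def dominates(f_a, f_b):
    """True if f_a is no worse than f_b in every objective and better in at least one (minimization)."""
    return all(a <= b for a, b in zip(f_a, f_b)) and any(a < b for a, b in zip(f_a, f_b))


def non_dominated(points):
    """Keep only the points whose objective values are not dominated by any other point."""
    evaluated = [(x, multi_objective_func(x)) for x in points]
    return [(x, f) for x, f in evaluated
            if not any(dominates(g, f) for _, g in evaluated)]


# Coarse grid over the bounds [-5, 5] x [-5, 5] with a step of 0.5
grid = [i * 0.5 for i in range(-10, 11)]
front = non_dominated(itertools.product(grid, grid))
for x, f in sorted(front):
    print(x, f)
```

On this grid, only (0, 0), (0.5, 0), and (1, 0) survive, all of which lie on the analytic Pareto segment.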

Initial values

By default, the optimizer automatically tries to generate initial values using the create_initial_values() method provided by the OptimizationProblem (see Initial Values).

To specify initial values manually, pass them to optimize() via the x0 argument.

```python
optimization_results = optimizer.optimize(
    optimization_problem,
    x0=[[0, 1], [1, 2]],  # one list of variable values [x_0, x_1] per individual
)
```

Note

If the optimizer requires additional starting values beyond the ones provided, it will generate new individuals automatically. Conversely, if more individuals are provided than necessary, the optimizer will ignore the excess values.
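The behavior described in the note can be sketched as follows. The function prepare_initial_population is hypothetical, and filling missing individuals by uniform random sampling within the variable bounds is an assumption; CADET-Process generates additional individuals with its own method, which may, for example, also account for linear constraints.

```python
import random


def prepare_initial_population(x0, pop_size, bounds, seed=12345):
    """Hypothetical: return exactly pop_size individuals, starting from the user-provided x0."""
    rng = random.Random(seed)
    # Keep at most pop_size of the provided individuals; the excess is ignored
    population = [list(x) for x in x0[:pop_size]]
    # Fill up with random individuals drawn uniformly within the bounds (assumption)
    while len(population) < pop_size:
        population.append([rng.uniform(lb, ub) for lb, ub in bounds])
    return population


bounds = [(-5, 5), (-5, 5)]
print(prepare_initial_population([[0, 1], [1, 2]], 4, bounds))
```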

Checkpoint

CADET-Process automatically stores checkpoints so that an optimization can be interrupted and restarted.

To resume from an existing checkpoint file, set use_checkpoint=True.

```python
optimization_results = optimizer.optimize(
    optimization_problem,
    use_checkpoint=True,
)
```
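The idea behind checkpointing can be illustrated with a minimal file-based sketch. The run function, the pickle file format, and the state layout are all hypothetical and unrelated to CADET-Process's actual checkpoint files; the point is that the state is written after every generation, so a later call with use_checkpoint=True continues where the previous run stopped instead of starting over.

```python
import os
import pickle
import tempfile


def run(n_generations, checkpoint_path, use_checkpoint=False):
    """Hypothetical toy optimization loop that saves its state after every generation."""
    state = {"generation": 0, "history": []}
    # Resume from the checkpoint only if requested and the file exists
    if use_checkpoint and os.path.exists(checkpoint_path):
        with open(checkpoint_path, "rb") as fh:
            state = pickle.load(fh)
    while state["generation"] < n_generations:
        state["generation"] += 1
        # Placeholder for evaluating a generation of individuals
        state["history"].append(state["generation"] ** 2)
        with open(checkpoint_path, "wb") as fh:
            pickle.dump(state, fh)
    return state


# Usage: run three generations, then "restart" and continue to five
path = os.path.join(tempfile.mkdtemp(), "checkpoint.pkl")
run(3, path)
resumed = run(5, path, use_checkpoint=True)
print(resumed["history"])  # [1, 4, 9, 16, 25]
```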

Optimization Results

The OptimizationResults object contains the results of the optimization.

This includes:

- exit_flag: Information about the optimizer termination.
- exit_message: Additional information about the optimizer status.
- n_evals: Number of evaluations.
- x: Optimal points.
- f: Optimal objective values.
- g: Optimal nonlinear constraint values.

Moreover, multiple plot methods are provided to visualize the results.

The plot_objectives() method shows the values of all objectives as a function of the input variables, using a colormap in which later generations are plotted in darker bluish colors.

Invalid points, i.e., points where nonlinear constraints are not fulfilled, are plotted in reddish colors, again with darker shades for later generations.

```python
optimization_results.plot_objectives()
```

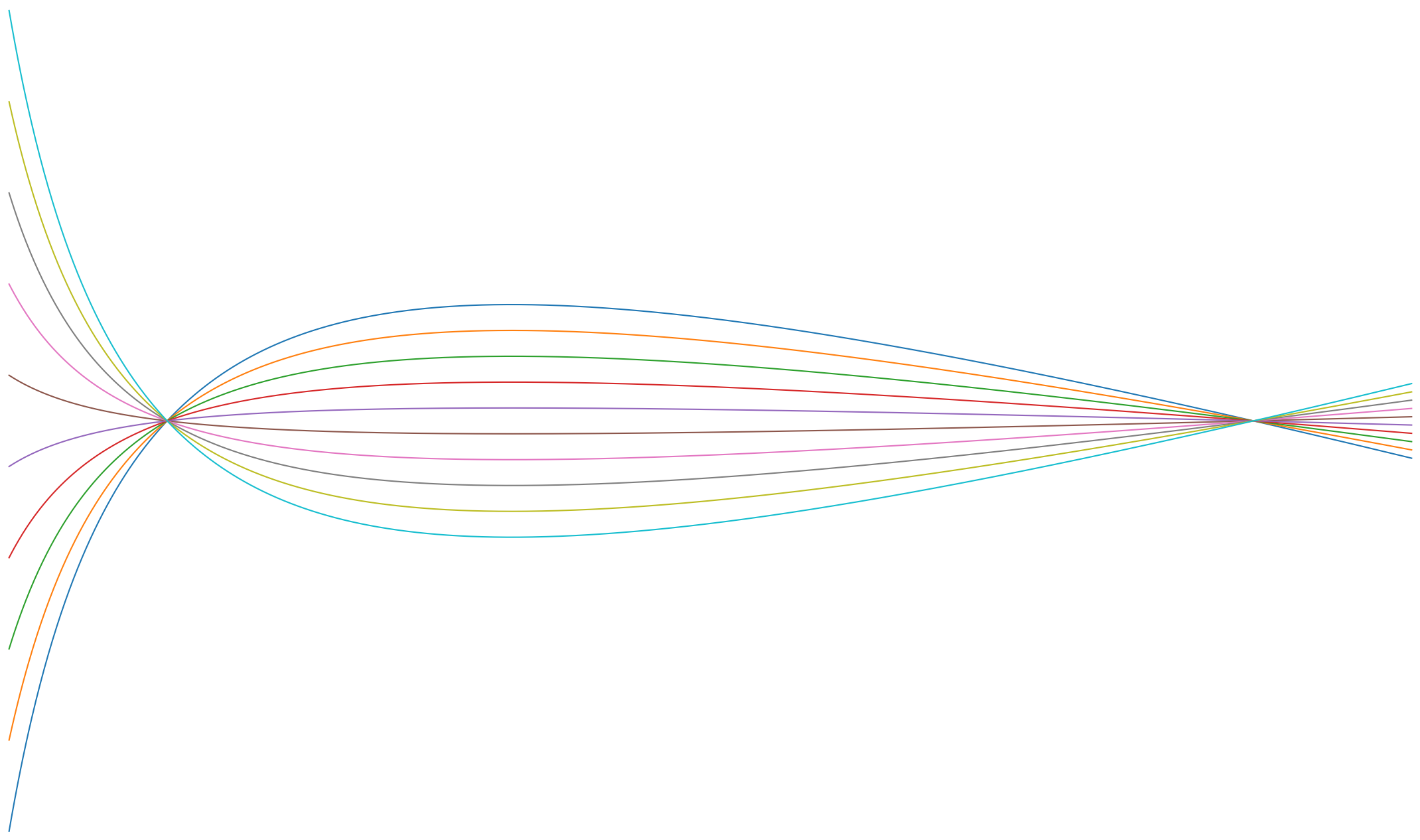

The plot_pareto() method shows a pairwise Pareto plot, where each objective is plotted against every other objective in a scatter plot, allowing for a visualization of the trade-offs between the objectives.

```python
optimization_results.plot_pareto()
```

The plot_convergence() method visualizes how the optimization converges by plotting the objective value against the number of function evaluations.

```python
_ = optimization_results.plot_convergence()
```
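The quantity behind a convergence plot can be sketched as a running minimum over the evaluation history. The helpers below are hypothetical and assume minimization; for several objectives, the running minimum is applied to each objective separately, which is one possible convention and not necessarily what plot_convergence() draws.

```python
from itertools import accumulate


def best_so_far(objective_values):
    """Running minimum: best objective value found up to each evaluation."""
    return list(accumulate(objective_values, min))


def best_so_far_per_objective(f_history):
    """Apply the running minimum to each objective (column) separately."""
    return [best_so_far(column) for column in zip(*f_history)]


print(best_so_far([3.0, 5.0, 2.0, 4.0, 1.0]))  # [3.0, 3.0, 2.0, 2.0, 1.0]
```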

The plot_corner() method plots each evaluated variable value against every other variable in a set of scatter plots.

The corner plot is particularly useful when exploring high-dimensional data or parameter spaces, as it allows us to identify correlations and dependencies between variables, and to visualize the marginal distributions of each variable.

It is also useful when we want to compare the distribution of variables across different subsets of the data or parameter space.

```python
optimization_results.plot_corner()
```
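The correlations that a corner plot reveals visually can also be quantified. The sketch below uses only the standard library to compute a Pearson correlation matrix between the variables of a set of evaluated points; the helpers are hypothetical, not part of CADET-Process, and assume that no variable is constant (a constant column would make the denominator zero).

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def correlation_matrix(samples):
    """Pairwise correlations between the variables (columns) of evaluated points."""
    columns = list(zip(*samples))
    return [[pearson(ci, cj) for cj in columns] for ci in columns]


# Three variables: the second rises with the first, the third falls with it
samples = [(0, 0, 3), (1, 2, 2), (2, 4, 1), (3, 6, 0)]
for row in correlation_matrix(samples):
    print([round(r, 3) for r in row])
```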